Artificial intelligence and confidential computing appear to be in irresolvable tension: on the one hand, AI poses a latent threat to confidential computing; on the other, it can help implement it. The key to resolving this contradiction is Confidential AI, an approach to AI that identifies the major sources of uncertainty and makes them manageable. Data and data sovereignty come first. In addition, AI can be used in a targeted and secure manner to optimize collaboration and communication applications. VNC, a leading developer of open source-based enterprise applications, explains the key elements:

- Decentralized LLMs: Large Language Models (LLMs) typically run on the vendors’ proprietary hyperscaler platforms. What happens to the data there is unknown. Therefore, it makes sense to download the LLMs to your own platforms using appropriate toolkits (such as Intel OpenVINO). This way, both the data and the applications remain in your own data center and are protected from unauthorized access.

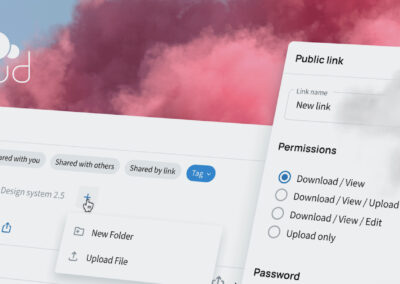

- Local AI for application optimization: Stripped-down versions of these LLMs can be integrated directly into applications through dedicated software stacks. AI functions such as intelligent assistants can thus be added directly and transparently at any time. On modular platforms, they can also be made available in the same form to all applications and modules.

- Train models using a local knowledge base: LLMs are usually trained on large amounts of data. When LLMs run locally, they can also be trained and optimized quickly, specifically and practically by querying the company’s own database. This also makes them transparent and auditable at any time.

- Acceleration through indexing: In addition, access to the local knowledge base can be accelerated by indexing the databases. Queries are no longer made against the entire database, but only against the indexes. This increases speed, availability and accuracy – and therefore productivity.

- Open source: Open source is the natural enemy of proprietary systems. To avoid vendor lock-in, confidential computing should steer clear of chronically non-transparent, vendor-specific AI platforms (PaaS), and open source should also be the first choice for AI applications at the software level (SaaS).
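The indexing idea from the list above can be illustrated with a minimal sketch. It assumes a small in-memory knowledge base and a simple inverted index built with the Python standard library; the document ids, texts and function names are hypothetical and are not taken from any VNC product. A query is answered by looking up its terms in the index rather than scanning every document.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents):
    """Map each token to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index


def search(index, query):
    """Return the sorted ids of documents that contain every query token."""
    tokens = tokenize(query)
    if not tokens:
        return []
    # Start with the matches for the first token, then intersect with the rest.
    result = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        result &= index.get(token, set())
    return sorted(result)


# Hypothetical local knowledge base (illustrative data only).
documents = {
    "policy-1": "Vacation requests must be approved by the team lead.",
    "policy-2": "Travel expenses are reimbursed within 30 days.",
    "faq-1": "How do I submit a vacation request?",
}

index = build_index(documents)
print(search(index, "vacation"))
print(search(index, "travel expenses"))
```

The index is built once and reused for every query, which is where the speed gain comes from. A production setup would more likely rely on a dedicated search engine or vector index; this sketch only shows the principle.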

“Confidential AI gives companies and users back the sovereignty over their own data and applications,” emphasizes Andrea Wörrlein, Managing Director of VNC in Berlin and Member of the Board of VNC AG in Zug. “Artificial intelligence can thus be used in a secure, targeted, independent and practical way to increase productivity and job satisfaction.”


About VNC – Virtual Network Consult AG

VNC – Virtual Network Consult AG, based in Switzerland, Germany and India, is a leading developer of open source-based enterprise applications and positions itself as an open and secure alternative to the established software giants. With VNClagoon, the organization and its global open source developer community have created an integrated product suite for enterprises, characterized by high security, state-of-the-art technology and low TCO. VNC’s customers include system integrators and telcos as well as large enterprises and institutions. Further information is available at https://vnclagoon.com, on Twitter @VNCbiz and on LinkedIn.

Contact

Andrea Wörrlein

VNC – Virtual Network Consult AG

Poststrasse 24

CH-6302 Zug

Phone: +41 (41) 727 52 00

aw@vnc.biz

Kathleen Hahn

PR-COM GmbH

Sendlinger-Tor-Platz 6

D-80336 Munich

Phone: +49-89-59997-763

kathleen.hahn@pr-com.de